|

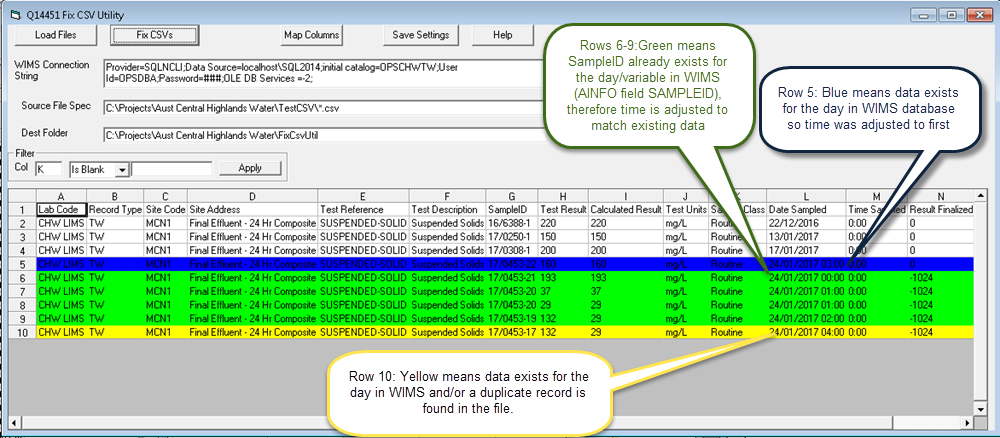

Q14451 Fix CSV Utility is intended to be a "preprocessor" of CSV files using the Q12351 LIMS CSV Interface. The Utility will scan the files and edit the Sample Date Time if duplicate records with a different Sample Id are found for the same location, analyte, and sample type.

Utility was developed for Central Highlands Water, Australia.

Example: The Sample Date field in the CSV file is ONLY the date (i.e. contains no time). The user takes 2 samples at a location and wants the data to go into an hourly variable as two records. The Utility will detect that the 2nd record is for the same date, location, analyte, and sample type and will add 1 hour to the Sample Date field. It will then save the CSV file and the Q12351 can import the data to the proper data slots.

Logic Description:

Step 1: Search WIMS database for existing data (AINFO Field SAMPLEID)

- Find the WIMS variable based on Location (Site Code) = Vardesc.LIMS_LOC, Analyte (Test Reference) = Vardesc.LIMS_TEST, and LIMS UD1 (Sample Class) = VARDESC.LIMS_UD1.

- For the variable / Sample Date search the additional Info field SAMPLEID = SAMPLEID in File. If the SampleID is found, set the date to the datetime (data slot) in WIMS for that SampleID.

Step 2: If Step 1 does not find a WIMS Record search WIMS for any existing records for the day.

- For the variable / Sample Date find the Maximum Datestamp in the additional info table.

SELECT MAX(DATESTAMP) FROM DATADDH_I WHERE VARID = 233 AND DATESTAMP >= '2017-01-24 03:00:00' AND DATESTAMP < '2017-01-25 03:00:00'

- If Max date found (i.e. data exists) add one hour to Max Date and assume that is the data slot for the row. Search the file above the current row and see if a row exists for the same variable/date with the time calculated. If so, add 1 hour.

Examples:

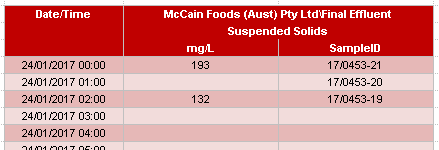

Existing data in WIMS before utility is run:

Row 5: SAMPLEID 17/0453-22. From the Site Code, Test Reference and Sample Class we find variable Var#467 (varid 233). Query Additional Info table for the day/SampleID.

SELECT DATESTAMP, SAMPLEID FROM DATADDH_I WHERE VARID = 233 AND DATESTAMP >= '2017-01-24 00:00:00' AND DATESTAMP < '2017-01-25 00:00:00' AND SAMPLEID='17/0453-22'

No records returned as that SampleID does not exist for the variable/day in the WIMS DATADDH_I table. Move to Step 2.

Find the Max DateStamp for the variable/sampledate

SELECT MAX(DATESTAMP) FROM DATADDH_I WHERE VARID = 233 AND DATESTAMP >= '2017-01-24 03:00:00' AND DATESTAMP < '2017-01-25 03:00:00'

Returns 24/01/2017 2:00:00 AM. So the first open slot is 24/01/2017 3:00:00 AM.

Next check rows 4 to 2 have data for that Date/Variable. If so, we would add one more hour, in this case no records are found and we set the time to 24/01/2017 3:00:00 AM and make the row blue.

Row 7: SAMPLEID '17/0453-20' Var#467 (varid 233). Query Additional Info table for the day/SampleID.

SELECT DATESTAMP, SAMPLEID FROM DATADDH_I WHERE VARID = 233 AND DATESTAMP >= '2017-01-24 00:00:00' AND DATESTAMP < '2017-01-25 00:00:00' AND SAMPLEID='17/0453-20'

Found record at 1AM. Set time to 24/01/2017 1:00 AM and make row green.

Row 10: SAMPLEID '17/0453-17 Var#467 (varid 233). Query Additional Info table for the day/SampleID.

SELECT DATESTAMP, SAMPLEID FROM DATADDH_I WHERE VARID = 233 AND DATESTAMP >= '2017-01-24 00:00:00' AND DATESTAMP < '2017-01-25 00:00:00' AND SAMPLEID='17/0453-22'

No records returned as that SampleID does not exist for the variable/day in the WIMS DATADDH_I table. Move to Step 2.

Find the Max DateStamp for the variable/sampledate

SELECT MAX(DATESTAMP) FROM DATADDH_I WHERE VARID = 233 AND DATESTAMP >= '2017-01-24 03:00:00' AND DATESTAMP < '2017-01-25 03:00:00'

Returns 24/01/2017 2:00:00 AM. So the first open slot is 24/01/2017 3:00:00 AM.

Next check rows 9 to 2 have data for that Date/Variable. We find a duplicate in row 5, so we add an hour to set the time to 24/01/2017 4:00:00 AM and make row yellow.

Setup:

Fill out the fields (described below), click [Map Columns] button to set which columns correspond to which fields in WIMS. Then click [Save Settings] and your settings will be saved to the config.ini file. Save Settings also saves the filter and the state of filtering (i.e. is a filter currently applied). As this utility is typically used as a scheduled task to autorun prior to the interface run, note that the filter will be applied during the autorun if saved in that state. We may need to install and setup the interface twice if the CSV files apply to more than 1 facility. In this case, setup a filter to only see records (rows) for one facility then install another copy of the interface and setup a filter for the second facility.

WIMS Connection String: A connection string to the WIMS database. NOTE: If using the OPSDBA username, set password to ###.

Source Folder: Folder and File specification for the source CSV files.

Dest Folder: The destination folder for the new CSV file (source files are combined into 1 csv file and placed in this folder).

Filter: Column to use to filter results. In this example we only want to look at Non-Routine so we set the filter to on Column I (Sample Type) equal to Non-Routine. Only rows displayed will be considered when adding variables.

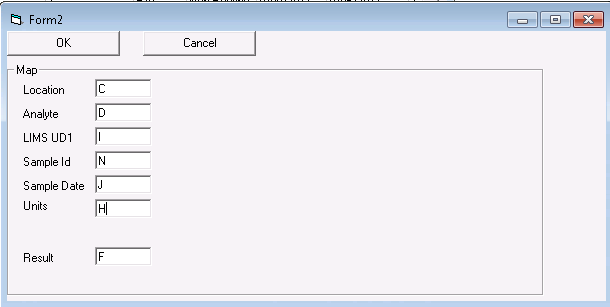

Map Columns:

Click the Map Columns button to setup which columns correspond to which fields in WIMS. Example, Column C in the CSV file holds the Location.

Location: Defines the sample location.

Analyte: Test or Analyte.

LIMS UD1: In this case is the Sample Type (Routine vs non-routine)

Sample ID: Lab Sample Number.

Sample Date: Date Sample was taken

Units: Not required, future.

Result: Not required, future

Using:

1. Click the [Load Files] button. It will load all files that meet the file specification in the Source Folder setting.

2. Click [Fix CSVs]. Will process and fix the Sample Dates. Source files are MOVED to the arc folder (created wherever the Q14451.exe is installed). The new file is placed in the Dest Folder and is named yyyy_mm_dd_hh_mm_ss.csv (e.g. 2017_06_14_09_25_29.csv).

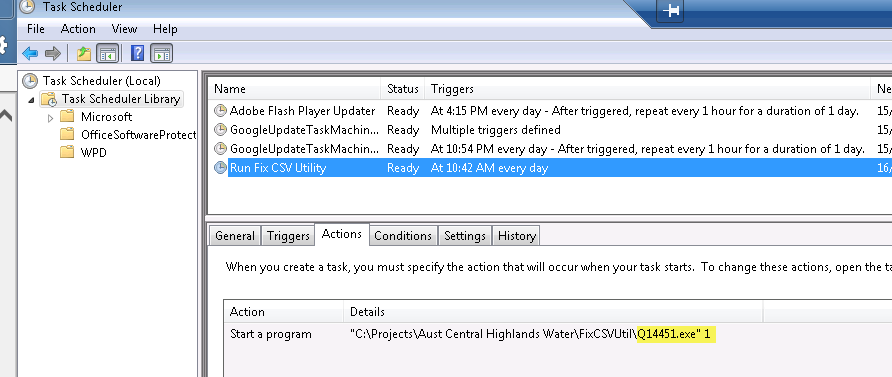

Auto Run:

If you launch Q14451.exe with a command line option it will Load the settings, load the files, Fix CSVs, and Exit the program. You can use the Windows Task Scheduler. Notice the 1 after the exe, this tells the utility to auto run.

Tech Notes:

Log files will be created for each run in the log folder. There is no log cleanup function as part of the utility, therefore users should clear the log folder as needed.

|